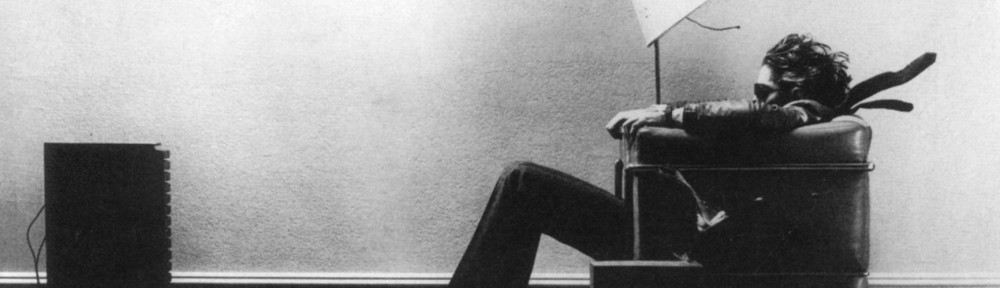

What you see above is still, in my opinion, the best way to do this. Those pedals, in that order, with that amplifier, give me enough sonic options to do pretty much anything I want with an electric guitar. There’s a lot I’m not capable of, in terms of both technique and technology, but right now my focus is on documenting what I can do rather than encroaching on the territory of what I can’t. Quite simply, I want to be able to come home, plug a guitar into that setup, and, should the moment strike me, press the record button and have a document of that evening’s inspiration.

Now, of course, I already have the capacity to do just that with present technology. Even if I don’t want to set up the computer, the A/D converter, and Logic (or even GarageBand), I can do as FBdN does and record directly to an iPhone OS device. Or I could use the old MiniDisc. I could even go straight to an old cassette multitrack.

The problem is TIME.

Not that these things take time; rather, I want to be able to take whatever I’m doing and bring it back into a digital audio workstation later. That’s no problem, unless there are going to be loops. And there are always loops. If a song sketch drifts by even a tenth of a second for every second of playback, then after a minute the deviation is 6 seconds. That’s not music; that’s chaos.
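To make the compounding concrete, here is a minimal sketch of the arithmetic. It assumes two idealized cases: a performance held at a steady but slightly wrong tempo against a fixed DAW grid, and a loop whose length is off by a fixed amount on every pass. The function names are hypothetical, chosen only for illustration.

```python
def drift_seconds(recorded_bpm: float, grid_bpm: float, elapsed_s: float) -> float:
    """Offset (seconds) between a steady free performance and a fixed DAW grid.

    Assumes the performance holds recorded_bpm exactly, the project is set to
    grid_bpm, and both start together at time zero. Positive means the
    performance is running ahead of the grid.
    """
    beats_played = recorded_bpm * elapsed_s / 60.0   # beats actually performed so far
    grid_time = beats_played * 60.0 / grid_bpm       # when the grid expects those beats
    return grid_time - elapsed_s


def loop_error_seconds(per_pass_error_s: float, passes: int) -> float:
    """Accumulated error when a loop's length is off by the same amount each pass."""
    return per_pass_error_s * passes


if __name__ == "__main__":
    # One bpm of difference: half a second out after just one minute.
    print(drift_seconds(121, 120, 60))  # 0.5
    # A loop 0.1 s too long, repeated 60 times: about 6 seconds of error.
    print(loop_error_seconds(0.1, 60))
```

The point of the first function is that even a tempo error too small to hear on a single pass (121 versus 120 bpm) grows linearly with time, so any loop-based arrangement eventually falls apart.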

So the question now is: how can I get a master clock associated with an impromptu recording of a song sketch? Playing along with a metronome is not enough. Whatever device records the initial jam has to associate a bpm with that snippet, which can then control other music. I’m not sure this is possible.
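One partial, after-the-fact workaround is to infer a bpm from the recording itself: if a jam is trimmed to a loop of a known number of bars, its tempo follows directly from its length. This is a minimal sketch under strong assumptions (a steady tempo within the loop, exact bar boundaries, a known time signature and sample rate); it is not a master clock, and it cannot fix a performance that speeds up or slows down. The helper names are hypothetical.

```python
def loop_seconds_from_samples(num_samples: int, sample_rate: int = 44_100) -> float:
    """Convert a loop's length in samples to seconds (assumes 44.1 kHz by default)."""
    return num_samples / sample_rate


def bpm_from_loop(loop_seconds: float, bars: int, beats_per_bar: int = 4) -> float:
    """Estimate tempo from a loop trimmed to exact bar boundaries.

    Assumes the loop holds a whole number of bars at a steady tempo.
    """
    if loop_seconds <= 0:
        raise ValueError("loop_seconds must be positive")
    return bars * beats_per_bar * 60.0 / loop_seconds


if __name__ == "__main__":
    # A four-bar loop in 4/4 that is 352,800 samples long at 44.1 kHz (8 seconds).
    length = loop_seconds_from_samples(352_800)
    print(bpm_from_loop(length, bars=4))  # 120.0
```

The resulting number could then be typed into the DAW as the project tempo, but it only describes the loop on average; any drift inside the loop remains.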

The more sensible approach would be to take the impromptu performance as inspiration and then properly construct music around it, starting with the beat and locking in the time. Of course, this means recreating that moment of creativity in a very sterile setting. Much less fun and much more time-consuming.

All of this would be a piece of cake if I (a) had no day job and (b) were fabulously wealthy.